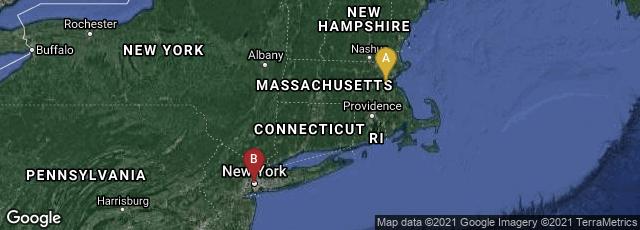

A: Cambridge, Massachusetts, United States, B: Manhattan, New York, New York, United States

On December 11, 2015, Brenden M. Lake, Ruslan Salakhutdinov, and Joshua B. Tenenbaum, from MIT and New York University, reported advances in artificial intelligence that surpassed human capabilities in reading and copying written characters. The key advance was that the algorithm outperformed humans in identifying written characters based on a single example. Until this time, machine learning algorithms typically required tens or hundreds of examples to perform with similar accuracy.

Lake, Salakhutdinov, Tenenbaum, "Human-level concept learning through probabilistic program induction", Science, 11 December 2015, 350, no. 6266, 1332-1338. On December 14, 2015, the entire text of this extraordinary paper was freely available online. I quote the first three paragraphs:

"Despite remarkable advances in artificial intelligence and machine learning, two aspects of human conceptual knowledge have eluded machine systems. First, for most interesting kinds of natural and man-made categories, people can learn a new concept from just one or a handful of examples, whereas standard algorithms in machine learning require tens or hundreds of examples to perform similarly. For instance, people may only need to see one example of a novel two-wheeled vehicle (Fig. 1A) in order to grasp the boundaries of the new concept, and even children can make meaningful generalizations via “one-shot learning” (1–3). In contrast, many of the leading approaches in machine learning are also the most data-hungry, especially “deep learning” models that have achieved new levels of performance on object and speech recognition benchmarks (4–9). Second, people learn richer representations than machines do, even for simple concepts (Fig. 1B), using them for a wider range of functions, including (Fig. 1, ii) creating new exemplars (10), (Fig. 1, iii) parsing objects into parts and relations (11), and (Fig. 1, iv) creating new abstract categories of objects based on existing categories (12, 13). In contrast, the best machine classifiers do not perform these additional functions, which are rarely studied and usually require specialized algorithms. A central challenge is to explain these two aspects of human-level concept learning: How do people learn new concepts from just one or a few examples? And how do people learn such abstract, rich, and flexible representations? An even greater challenge arises when putting them together: How can learning succeed from such sparse data yet also produce such rich representations? For any theory of learning (4, 14–16), fitting a more complicated model requires more data, not less, in order to achieve some measure of good generalization, usually the difference in performance between new and old examples. 
Nonetheless, people seem to navigate this trade-off with remarkable agility, learning rich concepts that generalize well from sparse data.

"This paper introduces the Bayesian program learning (BPL) framework, capable of learning a large class of visual concepts from just a single example and generalizing in ways that are mostly indistinguishable from people. Concepts are represented as simple probabilistic programs— that is, probabilistic generative models expressed as structured procedures in an abstract description language (17, 18). Our framework brings together three key ideas—compositionality, causality, and learning to learn—that have been separately influential in cognitive science and machine learning over the past several decades (19–22). As programs, rich concepts can be built “compositionally” from simpler primitives. Their probabilistic semantics handle noise and support creative generalizations in a procedural form that (unlike other probabilistic models) naturally captures the abstract “causal” structure of the real-world processes that produce examples of a category. Learning proceeds by constructing programs that best explain the observations under a Bayesian criterion, and the model “learns to learn” (23, 24) by developing hierarchical priors that allow previous experience with related concepts to ease learning of new concepts (25, 26). These priors represent a learned inductive bias (27) that abstracts the key regularities and dimensions of variation holding across both types of concepts and across instances (or tokens) of a concept in a given domain. In short, BPL can construct new programs by reusing the pieces of existing ones, capturing the causal and compositional properties of real-world generative processes operating on multiple scales.

"In addition to developing the approach sketched above, we directly compared people, BPL, and other computational approaches on a set of five challenging concept learning tasks (Fig. 1B). The tasks use simple visual concepts from Omniglot, a data set we collected of multiple examples of 1623 handwritten characters from 50 writing systems (Fig. 2) (see acknowledgments). Both images and pen strokes were collected (see below) as detailed in section S1 of the online supplementary materials. Handwritten characters are well suited for comparing human and machine learning on a relatively even footing: They are both cognitively natural and often used as a benchmark for comparing learning algorithms. Whereas machine learning algorithms are typically evaluated after hundreds or thousands of training examples per class (5), we evaluated the tasks of classification, parsing (Fig. 1B, iii), and generation (Fig. 1B, ii) of new examples in their most challenging form: after just one example of a new concept. We also investigated more creative tasks that asked people and computational models to generate new concepts (Fig. 1B, iv). BPL was compared with three deep learning models, a classic pattern recognition algorithm, and various lesioned versions of the model—a breadth of comparisons that serve to isolate the role of each modeling ingredient (see section S4 for descriptions of alternative models). We compare with two varieties of deep convolutional networks (28), representative of the current leading approaches to object recognition (7), and a hierarchical deep (HD) model (29), a probabilistic model needed for our more generative tasks and specialized for one-shot learning."
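To give a rough feel for what "best explain the observations under a Bayesian criterion" can mean in a one-shot setting, here is a minimal toy sketch. It is not the authors' BPL model: it assumes each character is reduced to a short list of numbers, assumes each class is known from exactly one example, and swaps in a simple Gaussian noise model in place of a probabilistic program. The function names, the `sigma` parameter, and the sample data are all hypothetical.

```python
import math


def log_likelihood(test, example, sigma=1.0):
    """Log density of `test` under an isotropic Gaussian centred on `example`.

    Assumption: a toy stand-in for "how well does this one example
    explain the new observation"; BPL itself uses structured programs.
    """
    dims = len(example)
    squared_distance = sum((t - e) ** 2 for t, e in zip(test, example))
    normaliser = dims * math.log(sigma * math.sqrt(2 * math.pi))
    return -0.5 * squared_distance / sigma ** 2 - normaliser


def one_shot_classify(test, examples, log_prior=None):
    """Pick the label with the highest log prior + log likelihood.

    `examples` maps each label to its single training example.
    `log_prior` optionally maps each label to a log prior; uniform if omitted.
    """
    scores = {}
    for label, example in examples.items():
        prior = 0.0 if log_prior is None else log_prior[label]
        scores[label] = prior + log_likelihood(test, example)
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Hypothetical data: one example per class, two made-up features each.
examples = {"A": [0.0, 0.0], "B": [3.0, 3.0]}
label, scores = one_shot_classify([0.5, -0.2], examples)
print(label)
```

With a uniform prior this reduces to picking the nearest single example, which is exactly why it is only a toy: the paper's claim is that richer, compositional generative models generalize from one example far better than a simple distance of this kind.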